Why memory chips are the new frontier of the AI revolution

Memory Chips at the Core of the AI Boom

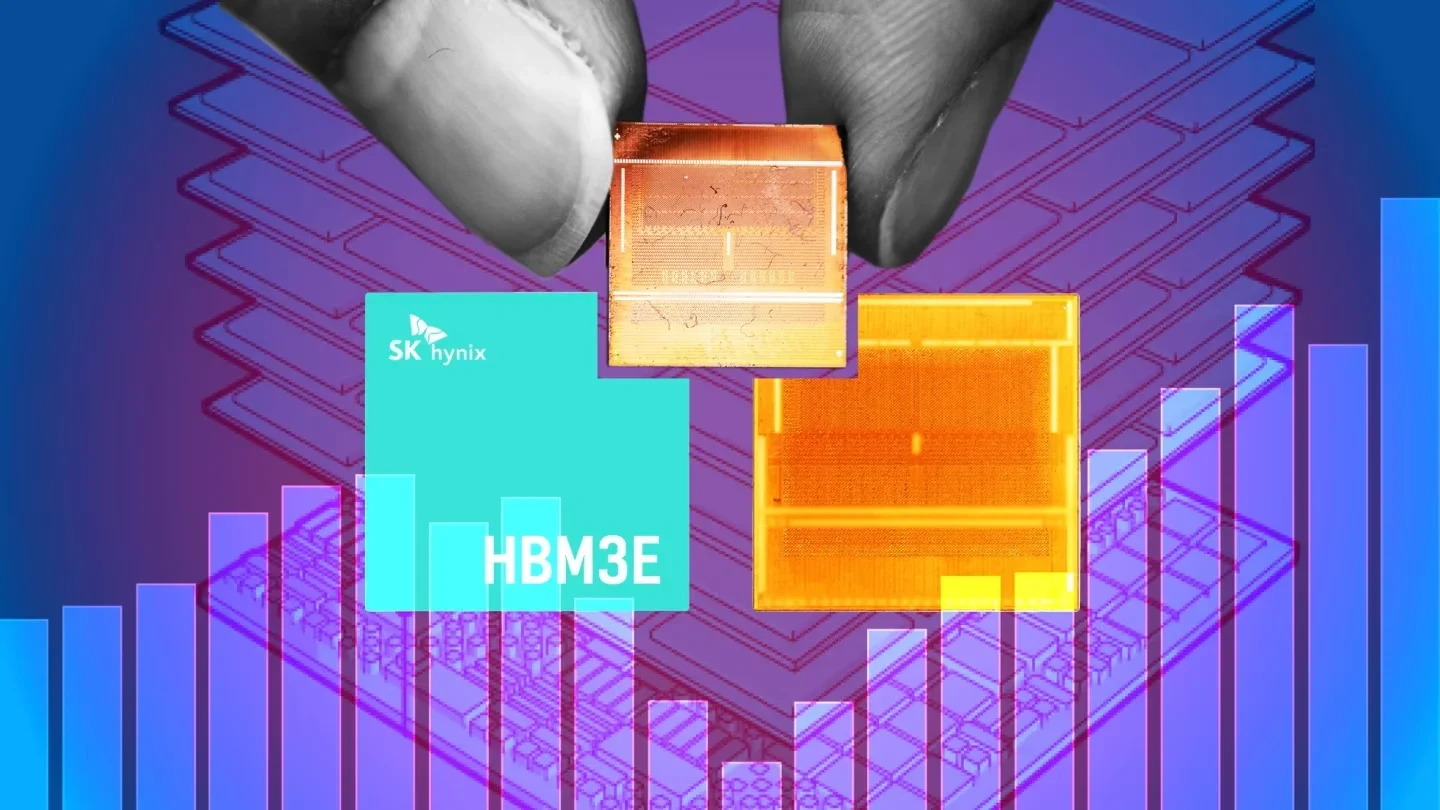

The global memory chip market is growing at an unprecedented pace as AI developers demand faster, higher-capacity hardware. Training and running advanced models like GPT and Gemini depend heavily on high-bandwidth, high-capacity memory that can feed massive datasets to processors efficiently. Industry leaders such as Samsung and SK Hynix are investing billions in next-generation HBM (High Bandwidth Memory) chips, which stack multiple DRAM dies and sit right next to the processor to accelerate AI training and inference.

Opportunities and Challenges

Memory chips have become a strategic pillar of the AI race, but the report leaves open whether supply can keep up with skyrocketing demand, especially given high production costs and supply-chain vulnerabilities. With Asia and the U.S. competing fiercely, the future of the AI revolution will hinge on how effectively memory chip makers balance performance, scalability, and affordability.

📌 Summary

Memory chips are emerging as the new backbone of AI, powering advanced models like GPT. Firms such as Samsung and SK Hynix are betting big on HBM technology, though rising costs and supply challenges could shape the next phase of the AI race.