Description

🖼️ Tool Name:

DreamActor-M1

🔖 Tool Category:

Video Generation; also fits under Generative AI & Media Creation and Virtual & Augmented Reality.

✏️ What does this tool offer?

DreamActor-M1 is an AI research model for generating lifelike talking-head videos from a single image plus an audio or text input. It produces expressive facial animation with natural lip sync, eye movement, and subtle expressions, which suits it to virtual characters, digital humans, and personalized video avatars.

⭐ What does the tool actually deliver based on user experience?

• Transforms a single image into a photorealistic speaking avatar

• Syncs facial expressions, eye gaze, and mouth movement to audio or text input

• Offers high temporal consistency and emotion control

• Outputs realistic video sequences suitable for films, games, education, and VTubers

• Great for storytelling, virtual assistants, dubbing, and content localization

🤖 Does it include automation?

Yes. DreamActor-M1 automates:

• Facial animation from static images

• Lip-sync and expression rendering based on audio or text

• Temporal smoothing for consistent frame transitions

• Multi-character generation from a batch of photos or other inputs

💰 Pricing Model:

Currently a research/demo release (free access, limited availability)

🆓 Free Plan Details:

• Public demo available via research platforms (e.g., Hugging Face or GitHub)

• Generate talking-head videos from an uploaded image plus text or audio

• Non-commercial usage only

💳 Paid Plan Details:

• No official paid plan yet; commercial licensing or API access may follow if the research moves toward a product release

🧭 Access Method:

• Research demo on platforms such as Hugging Face, or through integrations in third-party tools

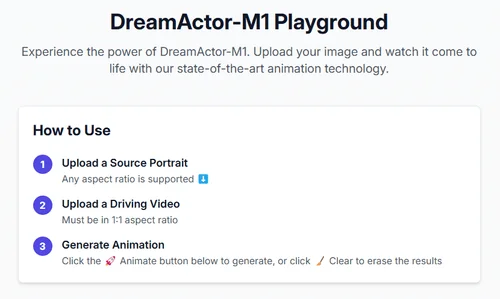

• Upload an image and an audio or text input to generate a video

• Downloadable video output for testing and experimentation

🔗 Experience Link: