Description

🖼️ Tool name:

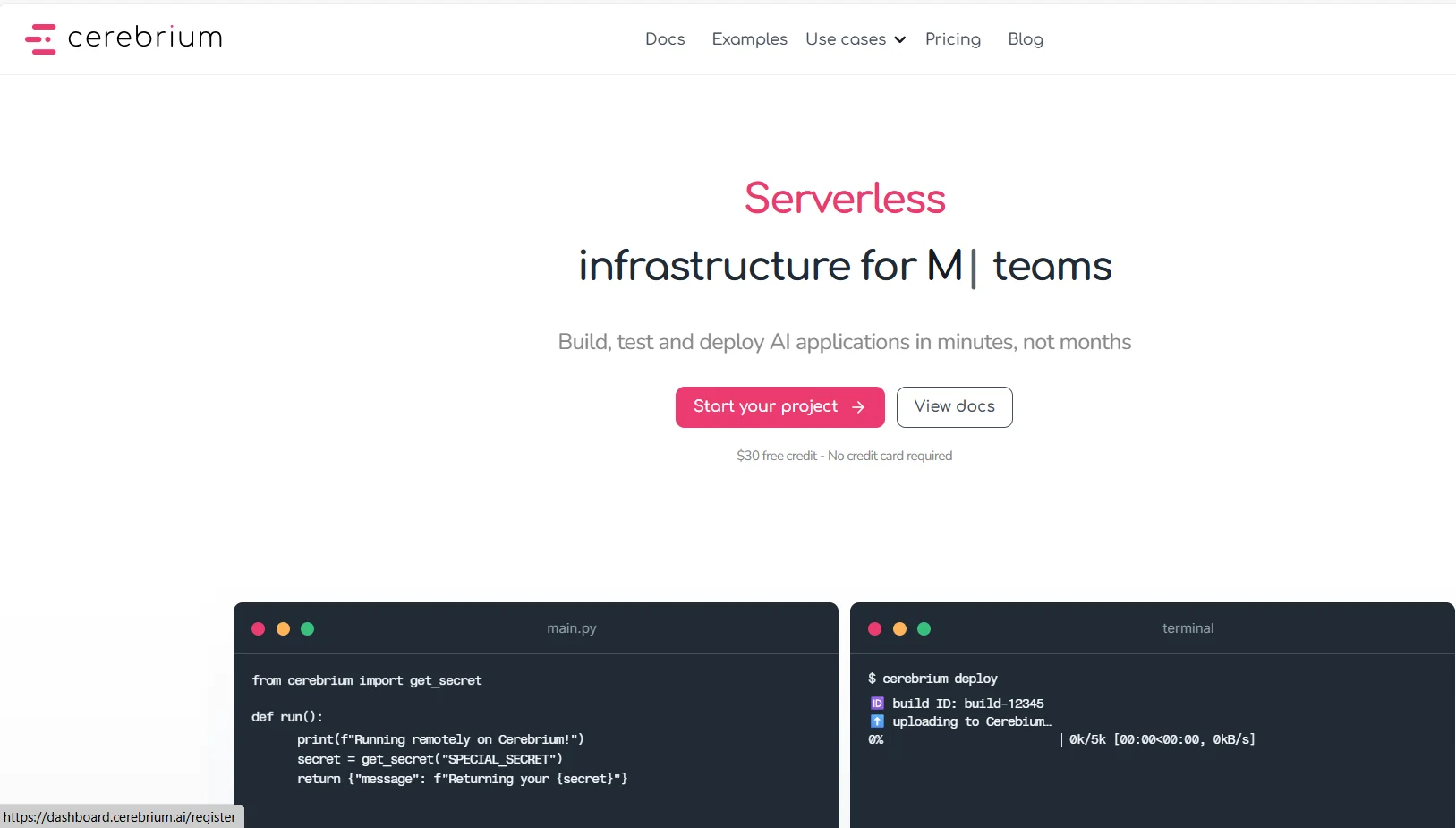

Cerebrium

🔖 Tool categorization:

DevOps, CI/CD and monitoring

API integrations and interfaces

Prediction and Applied Machine Learning

Intelligent automation and agents

Code-free workflows

✏️ What does it offer?

Cerebrium is a serverless cloud infrastructure platform for running AI applications in real time, without managing servers or dealing with the complexities of DevOps.

It lets developers and enterprises deploy AI models such as large language models, intelligent agents, and computer vision models globally with low latency, and it bills per second for the compute actually consumed.

⭐ What does it actually offer, based on user experience?

In practice, Cerebrium provides a production-grade runtime for latency-sensitive AI applications, because the platform handles the entire infrastructure, including:

Automatic scaling on demand

Global load distribution

Resource management, with GPUs provisioned only when needed

Low latency

Easy global deployment of applications

Developers focus on writing code and building the product, while Cerebrium handles operations, performance, and scaling, which makes it suitable for real production applications.

🤖 Does it include automation?

Yes. Cerebrium automates most of the infrastructure work, including:

Automatic scaling based on the number of requests

Dynamic resource management

Automatic starting and stopping of resources

Automatic billing based on actual consumption

All of this happens without manual server setup or direct infrastructure management.
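To make "automatic scaling based on the number of requests" concrete, here is a minimal sketch of a generic request-based autoscaling rule. It is an illustration of the general technique, not Cerebrium's actual algorithm; the function and its parameters (`concurrency_per_replica`, `min_replicas`, `max_replicas`) are hypothetical names chosen for this example.

```python
import math


def desired_replicas(in_flight_requests: int, concurrency_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 5) -> int:
    """Generic request-based autoscaling rule (hypothetical, not Cerebrium's algorithm).

    Returns enough replicas to serve every in-flight request at the given
    per-replica concurrency, clamped to [min_replicas, max_replicas].
    With min_replicas=0, an idle app scales down to zero replicas.
    """
    if concurrency_per_replica <= 0:
        raise ValueError("concurrency_per_replica must be positive")
    needed = math.ceil(in_flight_requests / concurrency_per_replica)
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    # Show how the replica count follows the load, capped at max_replicas.
    for load in (0, 1, 7, 40):
        print(load, desired_replicas(load, concurrency_per_replica=4))
```

With a minimum of zero, the same rule covers "automatic starting and stopping of resources": when no requests are in flight, no replicas run, so nothing is consumed.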

💰 Pricing model:

Cerebrium uses a subscription plus pay-as-you-go model: each plan has a monthly fee, and compute is charged separately based on the actual running time of your applications.
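A minimal sketch of how a monthly bill adds up under this model, assuming a hypothetical compute rate of $0.0005 per second and assuming each run is rounded up to the next whole second. The rate, the rounding rule, and the function names are illustrative assumptions, not Cerebrium's published prices; only the plan fees ($0 for Hobby, about $100 for Standard) come from this page.

```python
import math

# Hypothetical compute rate in USD per second (illustrative, not a real Cerebrium price).
HYPOTHETICAL_RATE_PER_SECOND = 0.0005

# Plan fees as listed on this page (the Standard fee is approximate).
PLAN_FEES = {"Hobby": 0.0, "Standard": 100.0}


def billed_seconds(run_durations: list[float]) -> int:
    """Assumed per-second billing: each run is rounded up to a whole second."""
    return sum(math.ceil(d) for d in run_durations)


def monthly_cost(plan: str, run_durations: list[float],
                 rate: float = HYPOTHETICAL_RATE_PER_SECOND) -> float:
    """Flat subscription fee plus pay-as-you-go compute for the time actually used."""
    compute = billed_seconds(run_durations) * rate
    return round(PLAN_FEES[plan] + compute, 2)


if __name__ == "__main__":
    runs = [0.4, 2.1, 3600.0]  # billed as 1 + 3 + 3600 = 3604 seconds
    print(billed_seconds(runs))             # 3604
    print(monthly_cost("Hobby", runs))      # 1.8
    print(monthly_cost("Standard", runs))   # 101.8
```

The point of the sketch is that the same workload costs the same in compute on either plan; the plans differ in the flat fee and in the limits listed below.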

🆓 Details of the free plan (Hobby):

Price: $0 per month

Limited number of user seats

Limited number of published applications

Limited number of concurrent GPUs

Support via Slack and Intercom

One day of log retention

Compute usage is billed separately

Effectively partial freemium: the subscription is free, but compute is pay-as-you-go

🎁 Trial credit:

$30 in free credit

No credit card required

Enough to run real applications and try the platform in practice

💳 Details of paid plans:

Standard (about $100 per month plus compute costs):

All the benefits of the free plan

More user seats

More published apps

More concurrent GPUs

Log retention of up to 30 days

Suited to production apps and startups

Enterprise (customized pricing):

Unlimited app deployments

Advanced GPU resources

Dedicated support

Unlimited log retention

Full infrastructure customization

Geared towards large enterprises and organizations

🧭 How to access the tool:

Cloud-based access through APIs and a developer-ready runtime environment

🔗 Pricing link or official website:

https://www.cerebrium.ai/pricing