Description

🖼️ Tool Name:

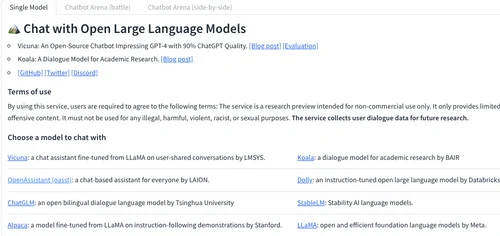

Chatbot Arena

🔖 Tool Category:

AI model benchmarking and evaluation platform; listed under both the Generative AI & Media Creation and the Science & Research categories.

✏️ What does this tool offer?

Chatbot Arena is an open, crowdsourced evaluation platform where users compare responses from different large language models (LLMs) without knowing which model produced which output. Developed by LMSYS (Large Model Systems Organization), it benchmarks AI chatbot performance through blind, head-to-head comparisons.

⭐ What does the tool actually deliver based on user experience?

• Blind comparison between two AI chatbot responses

• Users vote for the better response without knowing the model

• Aggregated leaderboard that ranks LLMs by Elo-style ratings computed from the votes (e.g., GPT-4, Claude, Mistral, Gemini)

• Transparent, community-driven evaluation

• Real conversation examples and rating history

• Regularly updated with new models and versions

🤖 Does it include automation?

Yes:

• Random pairing of model responses

• Automatic anonymization of responses (model names hidden)

• Leaderboard rankings recomputed periodically as votes accumulate

• Logging of responses and votes, which feed the performance metrics
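To illustrate how individual blind votes can add up to a leaderboard, here is a minimal sketch of an online Elo update in Python. It is an assumption-laden illustration, not LMSYS's implementation: the constants (`K`, `SCALE`, `BASE`, `INIT_RATING`), the vote format `(model_a, model_b, winner)`, and the model names are all hypothetical. LMSYS has described fitting a Bradley-Terry model for the live leaderboard, so its exact numbers will differ. Plain online Elo also depends on the order in which votes are replayed, which is one reason a batch fit can be preferred.

```python
from collections import defaultdict

# Hypothetical parameters chosen for illustration, not LMSYS's published settings.
K = 4              # step size: how far a single vote moves a rating
SCALE = 400        # a 400-point gap means the stronger model is favored 10:1
BASE = 10
INIT_RATING = 1000


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo logistic model."""
    return 1 / (1 + BASE ** ((r_b - r_a) / SCALE))


def compute_elo(battles):
    """Replay (model_a, model_b, winner) votes in order.

    winner is one of "model_a", "model_b", or "tie" (hypothetical labels).
    """
    ratings = defaultdict(lambda: float(INIT_RATING))
    outcome = {"model_a": 1.0, "model_b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in battles:
        if winner not in outcome:
            raise ValueError(f"unknown winner label: {winner!r}")
        expected_a = expected_score(ratings[model_a], ratings[model_b])
        delta = K * (outcome[winner] - expected_a)
        ratings[model_a] += delta  # A gains exactly what B loses,
        ratings[model_b] -= delta  # so the rating total stays constant
    return dict(ratings)


def leaderboard(ratings):
    """Return (model, rating) pairs sorted from highest to lowest rating."""
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    votes = [
        ("model-x", "model-y", "model_a"),
        ("model-x", "model-z", "model_a"),
        ("model-y", "model-z", "tie"),
    ]
    for rank, (model, rating) in enumerate(leaderboard(compute_elo(votes)), start=1):
        print(f"{rank}. {model}: {rating:.1f}")
```

With the three sample votes, `model-x` (two wins) ends up first; `model-y` and `model-z` finish close together just below the starting rating because their only head-to-head result was a tie.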

💰 Pricing Model:

Free

🆓 Free Plan Details:

• 100% free and open access

• Unlimited comparisons and voting

• Access to full leaderboard and model logs

💳 Paid Plan Details:

• None (academic and research-oriented, supported by LMSYS)

🧭 Access Method:

• Web App

🔗 Experience Link: